Am Ostersonntag wollte ich eigentlich zum Markt beim Kloster Chorin, allerdings war die Schlange dann doch ein wenig lang. Halb so wild, was lag also näher, als in das benachbarte Plagefenn zu wandern. Es lag ein erstes Flair von Frühling in der Luft. Die Buchen haben zwar noch nicht ausgeschlagen, aber es war Bärlauch zu entdecken und erste zarte Triebe zeigten sich hier und da.

Pilgern von Henningsdorf nach Bad Wilsnack

(Geschrieben am 08.09.2022)

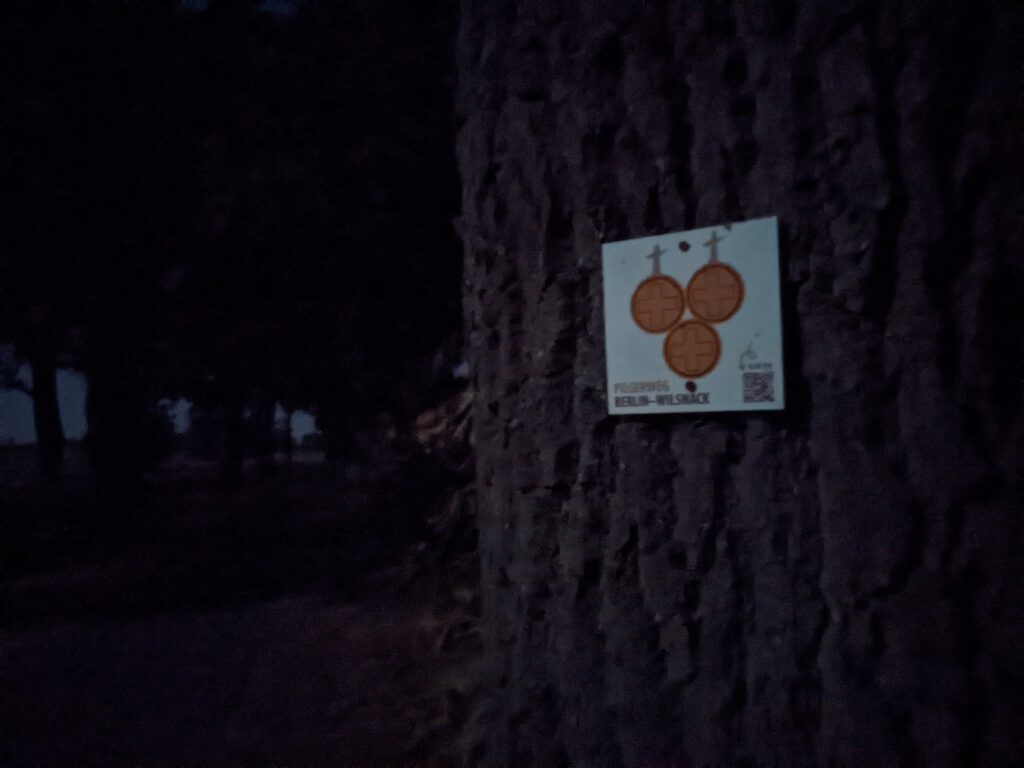

Bad Wilsnack war vom Ende des 14. Jahrhunderts bis ins 16. Jahrhundert wegen eines Hostienwunders ein wichtiges Pilgerziel in der norddeutschen Region. Während der Reformation wurden die Hostien verbrannt. Vor einigen Jahren jedoch wurde die Strecke zwischen Berlin und Bad Wilsnack als Pilgerweg reaktiviert. Während meiner Wanderungen auf dem Barnim und in der südlichen Uckermark sind mir immer wieder die pittoresken Feldsteinkirchen aufgefallen. Was lag also näher, als das Sportliche mit dem historischen Interesse zu verbinden und den besagten Pilgerweg zu versuchen. Also wurde flugs ein Pilgerpass beim Förderverein der Wunderblutkirche Bad Wilsnack bestellt und los ging’s am 10. August 2022 von Henningsdorf nach Flatow.

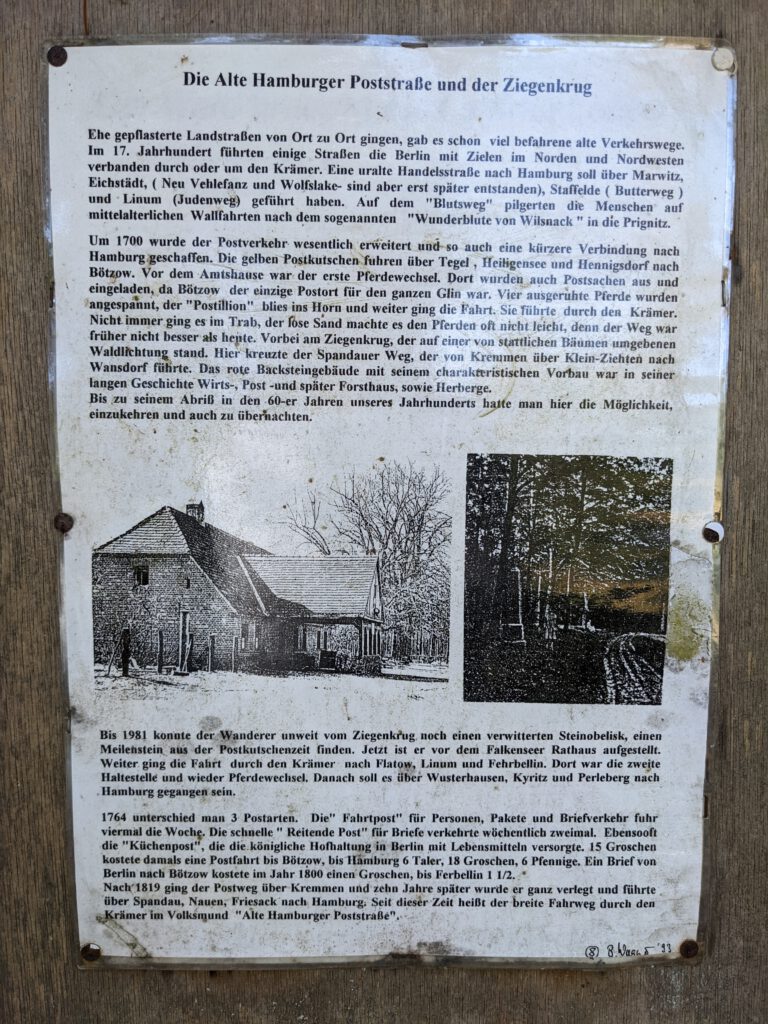

Die Strecke führt über Bötzow entlang der alten Hamburger Poststraße durch den Krämer Forst. Unterwegs lässt sich einiges Historisches entdecken, so zum Beispiel Hinweise auf die alte Raststation „Ziegenkrug“ oder die Legende um Reckin’s Eiche und Reckins Grab. Der Krämer ist ringförmig von Dörfern umgeben, diese Ringstruktur wurde leider durch den Autobahnbau zerschnitten. Nach Überquerung der Autobahn kam ich in Flatow an und wurde an der Kirche sehr freundlich von Herrn Kowalke empfangen. Nach dem Herrichten des Lagers im Andachtsraum gab es eine kurze Führung durch die sehr schönen Dorfkirche und einen Stempel in den Pilgerpass. Ein Blick in das Gästebuch im Gemeindehaus machte klar, dass dieses von Pilgerinnen und Pilgern gut und gerne genutzt wird und angeblich soll schon Friedrich der I. in einem der Räume übernachtet haben. Insgesamt habe ich mich sehr wohl gefühlt und war für die Koch- und Waschgelegenheit dankbar.

Am nächsten Morgen gab es leckeres Gebäck von Bäcker Guse und weiter ging es nach Hakenberg. Ursprünglich wollte ich nur nach Linum, aber ein Anruf bei Schwester Anneliese machte klar, dass die Strecke von Flatow nach Linum wohl etwas kurz sei („Wollen Sie nicht oder können Sie nicht?“). Ich solle es doch mal bis Hakenberg oder Fehrbellin versuchen, ohne es jedoch zu versäumen, in Linum beim Pfarrhaus vorbeizuschauen. In Linum angekommen, zeigte mir Schwester Anneliese die dortige Kirche und schenkte mir eines ihrer Fotos von den Staffelgiebeln der Kirche mit Storchennest. Leider hatte sie ihren Stempel verloren, so dass ich keinen Eintrag in den Pilgerpass bekam.

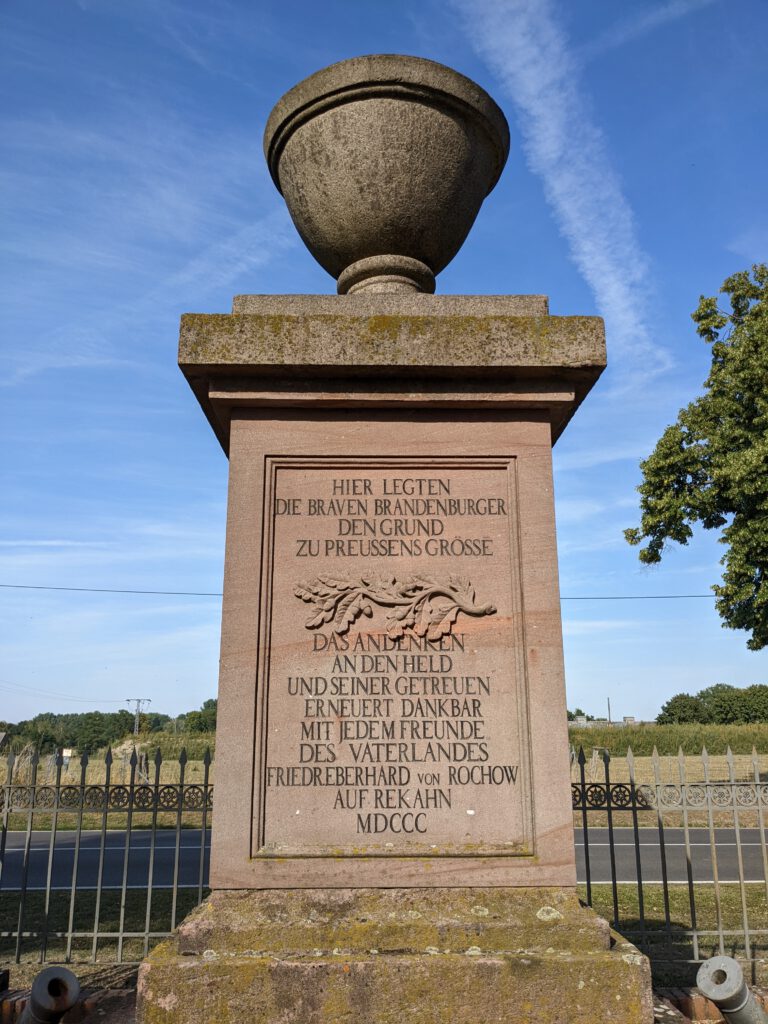

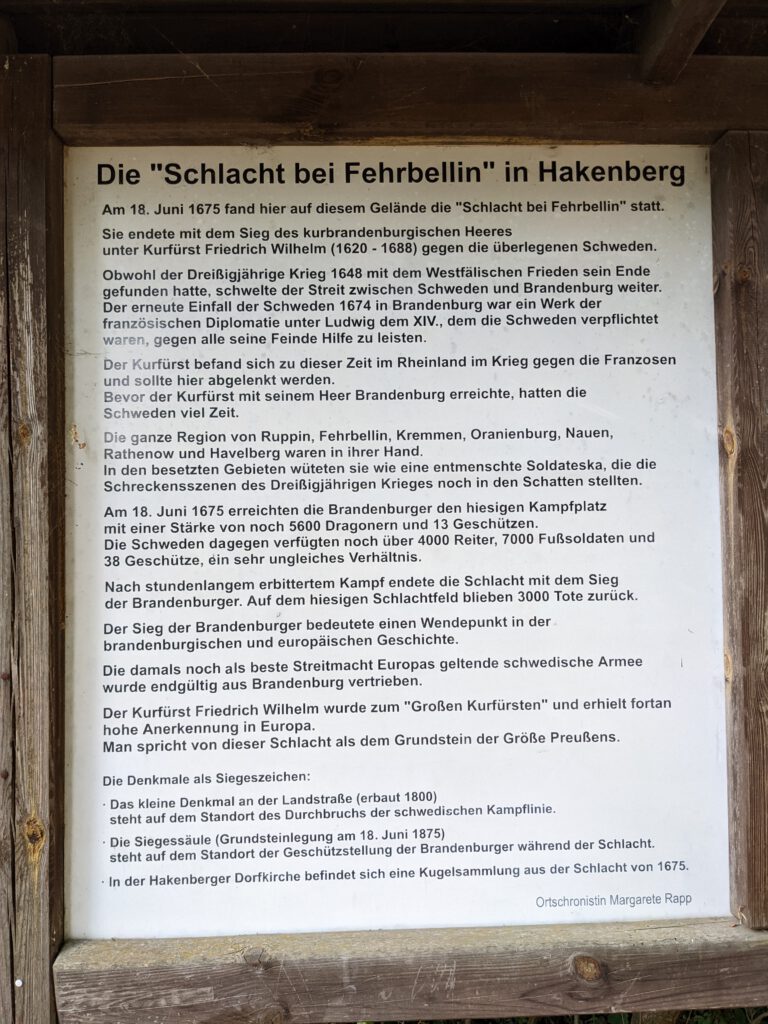

Hakenberg ist ein historisch interessanter Ort, weil die Schlacht von Fehrbellin ganz in der Nähe stattfand. Das dortige Pfarrhaus wird von Familie Knobloch bewohnt, in deren geräumigen Garten ich mein Zelt aufschlagen durfte. Ehrenamtlich kümmern sie sich um die Kirche und bieten auch Pilgernden eine Unterkunft. Am Abend ging es zur Siegessäule und im dortigen Wirtshaus gab es etwas Leckeres zu essen. Später wurde mir die Kirche mit einigen historischen Relikten aus der Schlacht (unter anderem Kanonenkugeln) gezeigt, und am Abend saß ich mit Familie Knobloch gesellig im Garten. In der Nacht fühlte ich mich von einem verspielten Schäferhund gut behütet und am Morgen gab es leckeres Spiegelei mit Zwiebeln von Herrn Knobloch höchstpersönlich zubereitet. Dann sollte es gestärkt nach Plan weiter bis Nackel gehen.

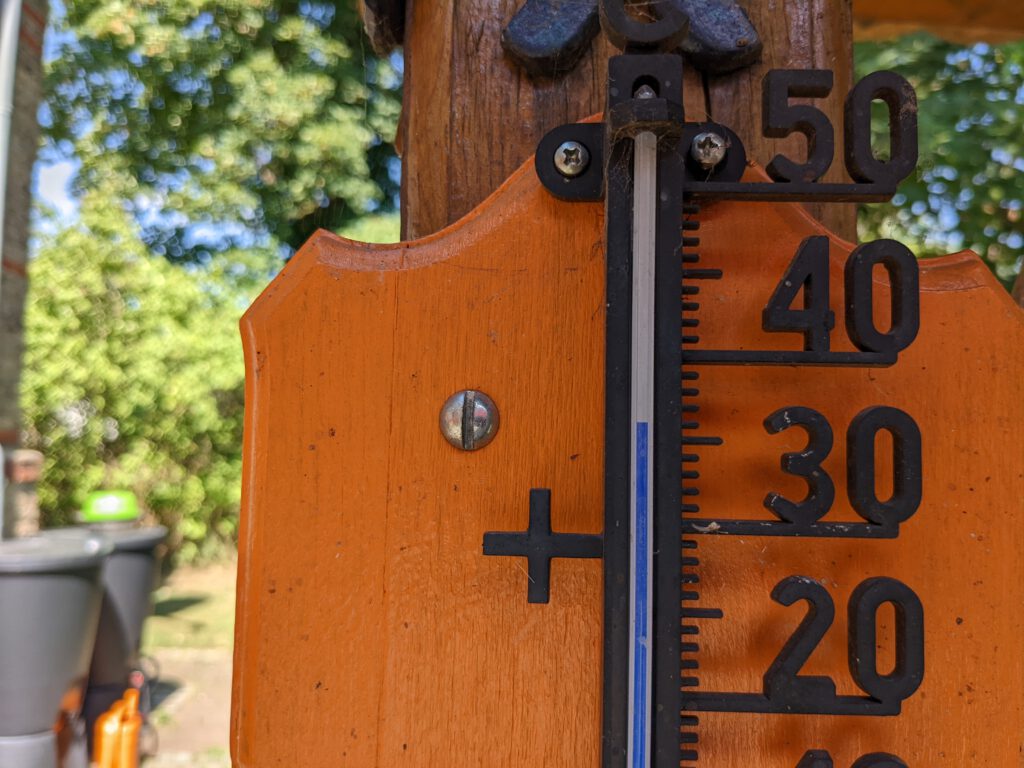

Der Weg über Fehrbellin war wegen der Hitze extrem anstrengend, besonders während der Passage durch das Luch hinter Fehrbellin fehlte so gut wie jeder Schatten und es war extrem heiß! In Garz angekommen wurde meine Wasserflasche von einem hilfsbereiten Garzer aufgefüllt und weiter ging es über einen fast vergessenen Feldweg nach Nackel, aber ich beschloss spontan doch noch weiter bis nach Barsikow zu gehen. Ein kurzes Telefonat hatte ergeben, dass die Pilgerherberge in der Kirche leer sei, der Konsum geöffnet habe und es Bier gäbe. Da waren die weiteren 4 Kilometer doch ein Kinderspiel. An diesem Tag habe ich meinen bisherigen Schrittrekord gebrochen (45335 Schritte). Die Entscheidung bis nach Barsikow zu gehen sollte sich als durchaus richtig herausstellen. Ich wurde freundlich von Frau Grützmacher empfangen und Herr Grützmacher zeigte mir die wunderbare Pilgerherberge im Kirchturm. Am Abend konnte der Pilgerhunger und -durst bei netter Gesellschaft mit Chili, Kartoffelsalat, Buletten und Bier im Konsum gestillt werde. Die Nacht in der Kirche war sehr ruhig und atmosphärisch. Selten habe ich so ruhig geschlafen. Ein Blick in das Gästebuch am Morgen zeigte, dass nicht nur ich von dieser Übernachtungsmöglichkeit beeindruckt war. Am Morgen gab es zudem einen Segen („Mit Segen läuft es sich gleich viel leichter!“) und weiter ging es bis nach Kyritz.

In Kyritz beschloss ich eine Auszeit zu nehmen und am Sonntag nicht zu wandern, sondern mich in Bluhm’s Hotel am Markt einzuquartieren und mir Kyritz mal genauer anzusehen. Nach einem leckeren Frühstück schaute ich aus Interesse beim Gottesdienst vorbei. Dies hatte ich schon länger nicht gemacht und es schien mir passend zur Pilgerthematik. Für die Größe der Kirche war die Gemeinde relativ klein. Beeindruckend fand ich das alte Taufbecken, auf dem vermutlich Jesus und Johannes der Täufer (Fellgewand) zu sehen sind. Nach dem Gottesdienst entspannte ich mich im Rosengarten und reckte die beanspruchten Glieder, als ein Mensch in Gärtnerstracht vorbeikam. Als ich ihn auf seine äußerst funktionelle Kopfbedeckung ansprach, die Hitze hatte mich dafür sensibilisiert, wurde ich sogleich zu einem Bier mit Freunden eingeladen. Nach interessantem Gespräch verbrachte ich den Nachmittag entspannt im Rosengarten, mit einer Stadtbesichtigung und auf dem Fest auf dem Marktplatz. Angesichts der Hitze entschloss ich mich, Kyritz schon in der Nacht gegen 2:00 Uhr zu verlassen, um bei angenehmeren Temperaturen zu wandern. Also ging es in der kühlen Dunkelheit weiter Richtung Söllenthin.

In Söllenthin kam ich in einem kleinen, alten Hof unter. Ganz wunderbar! Auch hier im Gästebuch hatten sich einige Pilgernde verewigt und ich fand es spannend, die unterschiedlichen Einträge zu lesen. Gott sei Dank regnete es noch an diesem Abend und ich konnte Störche beobachten, wie sie auf dem Kirchendach mit dem Wind spielten und fauchten. In der Kirche bewunderte ich die beeindruckenden Figuren des Altars und einen Stempel gab es auch.

Am letzten Tag ging es über Plattenburg nach Bad Wilsnack. Auf der Plattenburg war leider alles geschlossen und ich war froh, als ich in Bad Wilsnack angekommen war. Es ging sogleich zielstrebig in die offene Kirche, in der ich freundlich begrüßt wurde. Nachdem ich meinen Pilgerausweis gezeigt hatte, gab es eine ehrenvolle Überraschung! Außerdem stellte sich die Dame, welche die prächtige Kirche an diesem Tag betreute, als sehr sachkundig heraus und erzählte mir allerhand aus der Geschichte des Ortes. Dankbar am Ziel angekommen, trug ich mich in das Pilgerbuch in der Wunderblutkirche ein. Damit war die Pilgerreise abgeschlossen, und ich genoss noch einen schönen Abend in Bad Wilsnack, bevor es am nächsten Tag mit der Bahn in nur einer Stunde und 30 Minuten wieder zurück nach Berlin ging.

Insgesamt bin ich froh, die Wanderung gemacht zu haben und festzustellen, dass ich durchaus in der Lage bin, 130 Kilometer in 5 Etappen zu laufen. Ich hätte auch noch weiter laufen können, aber ich wollte es nicht gleich übertreiben. Besonders im Gedächtnis geblieben sind mir die Gespräche und Begegnungen mit den unterschiedlichen Menschen auf dem Weg. Gedankt sei auch den Gemeinden und Menschen, die solch eine Pilgerreise möglich machen. Auch die Kirchen sind wirklich interessant, beherbergen so einige Kulturschätze und werden von den Gemeinden gepflegt.

Denjenigen, die planen, sich von Berlin aus auf den Weg nach Bad Wilsnack zu machen, empfehle ich unbedingt vorab das Outdoor Handbuch „Mittelalterlicher Jakobsweg Berlin – Wilsnack – Tangermünde“ von Rainer und Cornelia Oefelein (4. Auflage) zu konsultieren. Weitere nützliche Informationen finden sich auf der Seite www.wegenachwilsnack.de. Ich fand den Weg nicht einfach, aber ich denke, wer den ersten Abschnitt von Henningsdorf nach Flatow durch den Krämer bewältigt, schafft auch den Rest. Ferner ist es günstig, unterschiedliche Kontaktadressen (siehe Buch und Webseite) bereit zu haben, falls die Übernachtungen und Streckenabschnitte nicht schon im Voraus geplant wurden. Würde ich den Weg nochmal gehen? Auf jeden Fall, zumal ich jetzt ein wenig mit den Gegebenheiten vertraut bin. Andererseits bietet es sich auch an, die Strecke von Bad Wilsnack nach Tangermünde weiterzugehen.

Von Henningsdorf nach Flatow

Von Flatow nach Hakenberg

Von Hakenberg nach Barsikow

Von Barsikow nach Kyritz

Von Kyritz nach Söllenthin

Von Söllenthin nach Bad Wilsnack

Rundwanderung Angermünde – Wolletz – Altkünkendorf – Angermünde

Bei dieser Wanderung war die Strecke entlang des Nordrandes des Grumsiner Buchenwaldes (UNESCO Weltnaturerbe) als Höhepunkt der Tour geplant, aber auch die anderen Abschnitte waren sehr schön und sehenswert. Um die Wanderung etwas abwechselungsreicher und herausfordernder zu gestalten, verlief ein Teil der Strecke auf dem Märkischen Landweg entlag des Nordurfers des Wolletzsees über Wolletz nach Altkünkendorf, was sich als gute Entscheidung erwiesen hat. Besonders der Abschnitt zwischen dem Strandbad und Wolletz war wegen der Nähe zum See und der vielfältigen Flora und Fauna sehr schön. Sehr sehenswert waren auch die alte Eiche und die Kirche in Altkünkendorf. Der angenehme Rastplatz beim Informationszentrum lud zu einer Pause ein. Der Buchenwald selbst hat mich zunächst nicht so beeindruckt. Reine Buchenwälder wirken auf mich meist etwas parkartig und seltsam still. Aber dennoch waren die hohen Bäume mit dem frischen Grün und das Spiel des Lichts auf dem Waldboden dann doch recht atmosphärisch. Auch der leichte Anstieg auf dem Abschnitt war erfrischend. Insgesamt war es eine sehr schöne Wanderung, die sich stimmungsvoll mit einem Gewitterregen zu Ende neigte. Ferner stellte ich einen persönlichen Streckenrekord auf! Bewusst wanderte ich an einem Tag noch nie eine Strecke von 30,5 Kilometern.

Rundwanderung Chorin-Brodowin-Chorin

Der Weg führte vom Bahnhof Chorin zum Kloster. Von dort aus ging es über Kopfsteinpflaster durch den Wald ins Naturschutzgebiet Plagefenn. Die Kernzone des Naturschutzgebiets um den großen und kleinen Plagesee steht unter besonderem Schutz und darf nicht betreten werden. Der erste Teil der Wanderung war recht nass und regnerisch. Durch die Nässe kamen das Kopfsteinpflaster, das moorige Gebiet und das frische Grün der Buchen besonders gut zur Geltung. In Brodowin empfing uns – ich war mal wieder mit Harald unterwegs – der Duft von frisch geräuchertem Fisch. Im luxuriösen Hofladen gab es alles, was das Herz begehrt. Nach einer kleinen Pause mit regionalen Spezialitäten ging es auf dem Denglerweg – ein Teil des Jakobswegs – zurück zum Kloster und schließlich zum Bahnhof Chorin. Ich finde die Gegend empfiehlt sich auch für einen längeren Aufenthalt, wenn es die Lage erlaubt. In Brodowin gibt es sicherlich einiges über Landwirtschaft zu lernen und fünf Wanderungen durch die reizvolle Gegend werden angeboten.